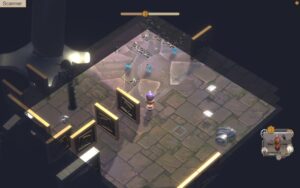

My game “Lillie is the Keeper” needed a small-scale ripple texture for an ocean shader. The in-game shader uses a 3d texture, sampled horizontally with world-space UVs, with the sampler moving up through the texture’s z axis over time to animate it. A first version used the old 4d rotation trick for repeating noise, in which a 4-dimensional noise texture is rotated 360 degrees around the Lovecraftian W axis between UVs 0 and 1. It tiled horizontally, but when the 3d texture repeated (up the z axis) there was an ugly little crossfade between unrelated frames.
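For reference, the looping trick is often written as wrapping each tiling axis around its own circle in a pair of noise dimensions. Here is a minimal sketch assuming that formulation; the function name `loop_coords_4d` and the `radius` parameter are made up for illustration and are not taken from the game's shader.

```python
import math

def loop_coords_4d(u, v, radius=1.0):
    """Map tiling coordinates (u, v) to a point in 4D noise space.

    Each axis is wrapped around its own circle, so u = 0 and u = 1 land on
    the same 4D point, and any continuous 4D noise sampled there will tile.
    """
    angle_u = 2.0 * math.pi * u
    angle_v = 2.0 * math.pi * v
    return (
        radius * math.cos(angle_u), radius * math.sin(angle_u),
        radius * math.cos(angle_v), radius * math.sin(angle_v),
    )
```

Two circles use up all four dimensions, which is why nothing is left over to loop the third (time) axis in the same way.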

I use Bforartists, a UI-focused fork of Blender, for 3d graphics and some texture creation–like this project. It doesn’t fix every pain point, but I can’t recommend it highly enough. This method, and the attached .blend file, will work just the same in mainline Blender.

As there’s no 5d noise function in the shader nodes (Shading tab), for the improved ripple texture we must go back to fundamentals. Ken Perlin’s original version of a solid texture, Perlin Noise, has a lot of complicated math behind it, but geometrically it is pretty straightforward: Create a 3d grid, attach a pseudorandom value to each grid point (strictly speaking a gradient direction, in Perlin’s case), and apply a function to smoothly interpolate between the points in all three dimensions. (As best I understand it, Perlin’s own improvement, Simplex Noise, replaces the grid of cubes with tetrahedrons, i.e. triangular pyramids, but that’s at least Whisperer-in-Darkness-grade math for me.)
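The grid-and-interpolate idea can be sketched as value noise, a simpler cousin of Perlin Noise that stores a random value (rather than a gradient) at each grid point. This is an illustration only: the hash in `lattice_value` is an arbitrary stand-in, and none of these names come from Blender.

```python
import math

def lattice_value(ix, iy, iz, seed=0):
    """Hypothetical integer hash: a repeatable pseudorandom value in [0, 1)."""
    h = (ix * 374761393 + iy * 668265263 + iz * 2147483647
         + seed * 1274126177) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 2**32

def smoothstep(t):
    """Ease curve so the blend flattens out at each grid point."""
    return t * t * (3.0 - 2.0 * t)

def lerp(a, b, t):
    return a + (b - a) * t

def value_noise(x, y, z, seed=0):
    """Smoothly interpolate the eight lattice values around (x, y, z)."""
    ix, iy, iz = math.floor(x), math.floor(y), math.floor(z)
    tx, ty, tz = smoothstep(x - ix), smoothstep(y - iy), smoothstep(z - iz)

    def corner(dx, dy, dz):
        return lattice_value(ix + dx, iy + dy, iz + dz, seed)

    # Blend along X, then Y, then Z (trilinear interpolation with eased weights).
    x00 = lerp(corner(0, 0, 0), corner(1, 0, 0), tx)
    x10 = lerp(corner(0, 1, 0), corner(1, 1, 0), tx)
    x01 = lerp(corner(0, 0, 1), corner(1, 0, 1), tx)
    x11 = lerp(corner(0, 1, 1), corner(1, 1, 1), tx)
    y0 = lerp(x00, x10, ty)
    y1 = lerp(x01, x11, ty)
    return lerp(y0, y1, tz)
```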

There is a shader node that can perform similar interpolation: Point Density. Note that you’ll have to switch your renderer to Cycles in order to use it. In Eevee it’ll just output black. This is poorly documented and the interface won’t help you.

The Point Density node takes the vertices of a mesh (or particles of a particle system) and outputs a greyscale representation of their density. With it, it’s possible to create noise from your own handmade 3d grid of points–like an array of cubes. Since you’re creating the vertices yourself, making them repeat is as simple as replicating them in x, y and z–for instance, with three Array modifiers.
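To see why replicated points give repeating noise, here is a small sketch of a density lookup over a jittered lattice that repeats every 1.0 units. It is not how Cycles evaluates Point Density: the falloff kernel, the jitter amount, and every name below are assumptions made for illustration. Wrapping the distance is equivalent to copying the points into the neighbouring cells, as long as `radius` is no more than half the cell size.

```python
import math
import random

def make_cell_points(n=4, jitter=0.3, seed=1):
    """Jittered n x n x n lattice of points inside the unit cell [0, 1)^3."""
    rng = random.Random(seed)
    step = 1.0 / n
    points = []
    for i in range(n):
        for j in range(n):
            for k in range(n):
                points.append(tuple(
                    ((c + 0.5) * step + rng.uniform(-jitter, jitter) * step) % 1.0
                    for c in (i, j, k)
                ))
    return points

def wrapped_delta(a, b):
    """Shortest distance between a and b on an axis that repeats every 1.0."""
    d = abs(a - b) % 1.0
    return min(d, 1.0 - d)

def density(p, points, radius=0.5):
    """Sum a smooth falloff from every point closer than `radius` to p."""
    total = 0.0
    for q in points:
        d = math.sqrt(sum(wrapped_delta(a, b) ** 2 for a, b in zip(p, q)))
        if d < radius:
            t = 1.0 - d / radius
            total += t * t * (3.0 - 2.0 * t)
    return total
```

Sampling at `(0.2, 0.7, 0.0)` and at `(1.2, -0.3, 1.0)` should give the same density, which is the tiling property the Array modifiers provide in the scene.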

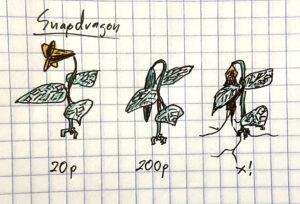

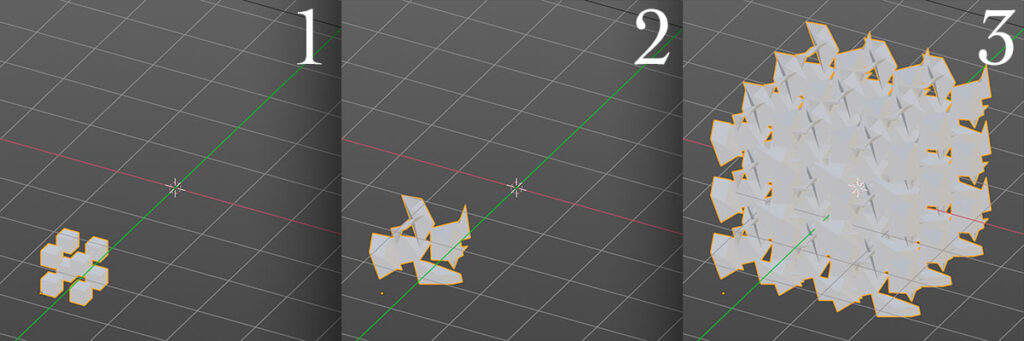

To start out, create a 1x1x1 cube. Pop into Edit Mode and set the cube’s origin to its leftmost, bottom-most, hindmost vertex. Back in Object Mode, move it to -1.5, -1.5, -1.5. Just one cube (8 points) won’t look like much as noise, so we’ll double it in all three dimensions. Scale your cube to 0.5, 0.5, 0.5. Now double it in X, Y and Z with three Array modifiers: Add an Array modifier, set the Count to 2, and the Relative Offset’s Factor X to 2. Add two more Array modifiers, for the Y and Z axes (Relative Offset Factor Y to 2 on the second, and Z to 2 on the third). On those two, set Factor X back to 0, since a new Array modifier defaults to an X factor of 1.

We can randomize our cubes’ vertex positions with another modifier: Displace deforms the mesh based on a texture. The app can generate this noise texture for you. Go to Texture Properties, create a new Texture, set the type to “Clouds,” and select “Color” rather than “Greyscale.” Go back to your cubes’ modifiers, add a Displace modifier (after the three Array modifiers), set the Coordinates to “Global,” the Direction to “RGB to XYZ” and the Space to “Local.” Play with the Strength and Midlevel properties if you want more distortion in your cubes.

Now you’ve got a box of eight distorted cubes, sort of down in the lower left-hand corner of the world axis. Let’s replicate them with three further Array modifiers: After your Displace modifier, add an Array, and set the Count to 3. Since the Displace modifier is messing with the overall dimensions of the cubes, deselect Relative Offset and select Constant Offset. Set Distance X to 2. Now, make two more Array modifiers, for Y and Z, also with Constant Offset Distance 2 (in Y and Z respectively, with Distance X set to 0). You should now have a repeating set of distorted cubes in all 3 dimensions.
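If you would rather script the modifier stack than click through it, the settings above can be written down as plain data and applied from the Python console. This is a sketch, not a tested add-on: the property names (`relative_offset_displace`, `constant_offset_displace`, `texture_coords`, and so on) are the ones I believe Blender's Python API uses, so check them against your version. `modifier_stack` and `apply_stack` are hypothetical helper names.

```python
try:
    import bpy  # only available inside Blender / Bforartists
except ImportError:
    bpy = None

def modifier_stack():
    """The seven modifiers from the walkthrough, in order, as plain data."""
    axes = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
    stack = []
    for axis in axes:  # double the cube in X, Y and Z
        stack.append({
            "type": "ARRAY", "count": 2,
            "use_relative_offset": True, "use_constant_offset": False,
            "relative_offset_displace": tuple(2.0 * a for a in axis),
        })
    stack.append({  # distort the vertices with the colour Clouds texture
        "type": "DISPLACE", "texture_coords": "GLOBAL",
        "direction": "RGB_TO_XYZ", "space": "LOCAL",
    })
    for axis in axes:  # repeat the distorted block three times per axis
        stack.append({
            "type": "ARRAY", "count": 3,
            "use_relative_offset": False, "use_constant_offset": True,
            "constant_offset_displace": tuple(2.0 * a for a in axis),
        })
    return stack

def apply_stack(obj, texture=None):
    """Add the modifiers to `obj`; must be run inside Blender."""
    if bpy is None:
        raise RuntimeError("bpy is not available outside Blender")
    for i, spec in enumerate(modifier_stack()):
        mod = obj.modifiers.new(name=f"{spec['type'].title()} {i}", type=spec["type"])
        for key, value in spec.items():
            if key != "type":
                setattr(mod, key, value)
        if spec["type"] == "DISPLACE" and texture is not None:
            mod.texture = texture
```

Inside Blender, something like `apply_stack(bpy.context.active_object, bpy.data.textures["Texture"])` would apply it to the selected cube, assuming your Clouds texture has that name.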

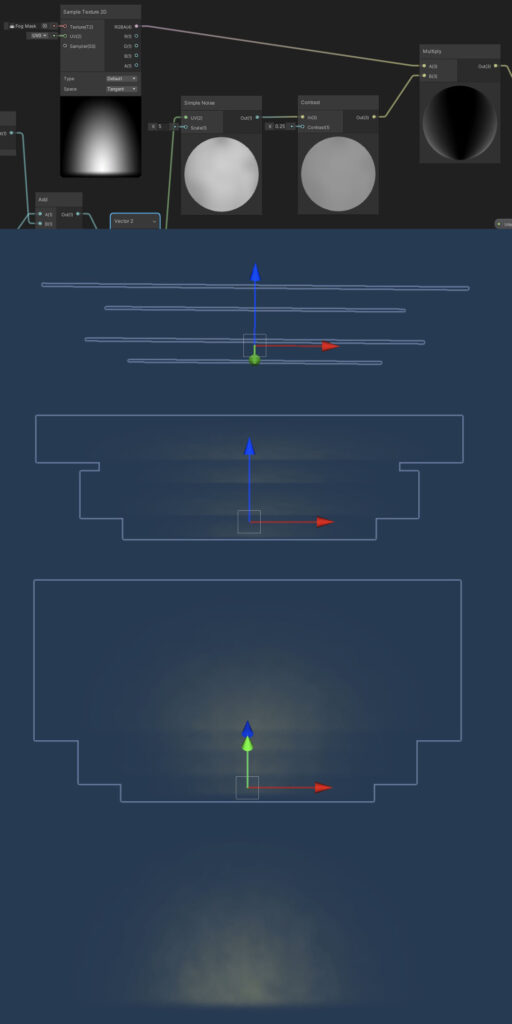

Hide your cubes (including from renders–the camera icon in the outliner). Create a 1×1 plane at the origin. Delete any lights in your scene. Set the camera’s output to a texture-friendly square, like 512×512 pixels (printer icon, Resolution). Set the camera to Orthographic (camera icon, Lens: Type) and aim it straight on to your plane. Create a new Material on the plane (material ball icon, New).

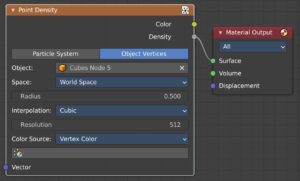

Switch to the Shading tab with your new Material, delete the “Principled BSDF” node, and instead add a “Point Density” node. Select “Object Vertices” rather than “Particle System.” Under Object, select your mesh of repeating distorted cubes. Set the Space to “World Space,” the Radius to 0.5, and the Interpolation to “Cubic.” Drag the node’s Density output straight to the Material Output node’s Surface input.

That’s it, in a nutshell. Move your plane from -0.5 up to 0.5 in Z and the noise pattern will come back around to where it started. It’ll also wrap around at the X and Y edges.

You can create finer-grained noise by doubling your cubes and halving their scale. Or double-doubling and half-halving. Or double-double-doubling… You get the idea. For each iteration, halve the scale, double the Count of your first 3 modifiers, then double the distance between them with your last 3 modifiers. I also recommend halving the Size attribute of your Clouds texture each time.
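The doubling rule is easy to lose track of after a few rounds, so here it is as a small calculator. It only restates the arithmetic above; `refinement_settings` is a hypothetical helper, and `base_clouds_size` is an assumed starting Size for the Clouds texture (use whatever yours is set to).

```python
def refinement_settings(level, base_clouds_size=0.25):
    """Settings after `level` rounds of doubling (level 0 is the basic setup)."""
    if level < 0:
        raise ValueError("level must be >= 0")
    factor = 2 ** level
    return {
        "object_scale": 0.5 / factor,            # halve the cube's scale
        "first_array_count": 2 * factor,         # double Count on the first 3 Arrays
        "last_array_distance": 2.0 * factor,     # double Distance on the last 3 Arrays
        "clouds_size": base_clouds_size / factor,  # halve the Clouds texture Size
    }
```

For example, `refinement_settings(3)` gives a scale of 0.0625 with a Count of 16 on the first three Array modifiers.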

Note that at larger numbers of cubes (say 16 or 32 per box) the “Point Density” node’s Resolution attribute will need to be increased. Add 100 at a time, until there’s no visible difference adding 100 more. Accept the hit in performance. (If you see seams in the final rendered texture, too low a Resolution setting here is usually the culprit.)

Download the Bforartists/Blender file here: Repeated Tiled Noise v2.blend

In the file, the Demo collection will demonstrate the simple version, while the Production collection is my final water ripples setup. In the simple version, there are also some unused shader nodes demonstrating a setup for combining noise at different scales to create more complex output–again, just like Perlin Noise.
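The general idea of combining noise at different scales can be sketched in a few lines. This is the textbook approach rather than a transcription of the nodes in the file: `base_noise` is a stand-in sine wave for any layer that repeats every 1.0 units, and `octaves` and `gain` are illustrative parameters.

```python
import math

def base_noise(x):
    """Stand-in for one layer of tiling noise: repeats every 1.0 units."""
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * x)

def layered_noise(x, octaves=4, gain=0.5):
    """Sum layers at doubling frequency and shrinking strength."""
    total, amplitude, norm = 0.0, 1.0, 0.0
    for octave in range(octaves):
        frequency = 2 ** octave  # whole-number multiples keep the overall period
        total += amplitude * base_noise(x * frequency)
        norm += amplitude
        amplitude *= gain
    return total / norm  # normalised back into the 0..1 range
```

Because each layer's frequency is a whole multiple of the first, the sum still repeats every 1.0 units.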

The Production collection, my own version for water ripples, does a few additional things. I’m creating the 3d texture in Unity, which requires each frame (one slice of the 3d noise at a fixed Z) to be stacked side-by-side in a single image file. As such, I’ve added an Array modifier to the plane I’m rendering, so that it becomes 16 side-by-side squares stepping up in Z from -0.5 to (almost) 0.5. (Almost 0.5, because step 17 would be 0.5, and we don’t want to repeat a frame. The app will do basic math when setting fields numerically, so entering “1/16” will give you 0.0625…) The orthographic camera is adjusted to render it all in one frame. Within the shader graph, I’ve made the position Vector fed into the “Point Density” node a combination of the world Z coordinate and the plane’s UV coordinates (standing in for X and Y). Since I want a lot less change as the noise loops in the Z axis than in the horizontal directions, I’ve not doubled the number of cubes or halved their scale along the Z axis, and I’ve split the displacement texture into three different Greyscale textures accordingly. There’s an RGB Curves node added after the Point Density node to make the interpolation more wave-like. Finally, the greyscale heights have been translated into a Normal Map via a Bump node.
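The slice arithmetic works out like this. A small sketch with hypothetical helper names; the 512-pixel tile size is borrowed from the earlier render setup as an example and is not necessarily what the Production collection uses.

```python
def slice_heights(count=16, z_min=-0.5, z_max=0.5):
    """Z height of each frame; z_max itself is skipped because it repeats z_min."""
    step = (z_max - z_min) / count
    return [z_min + i * step for i in range(count)]

def atlas_size(count=16, tile=512):
    """Pixel size of a strip holding `count` square tiles side by side."""
    return (count * tile, tile)
```

With the defaults, the frames step by 0.0625 from -0.5 up to 0.4375, and the rendered strip is 8192×512 pixels.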

Smooth sailing!